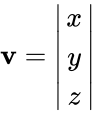

When you're doing math for graphics, physics, games, or whatever, you should use column vectors when you're representing points, differences between points, and the like.

Symbolically, you should write a vector as a column,

$$\mathbf{v} = \begin{bmatrix} x \\ y \\ z \end{bmatrix},$$

and do matrix-times-vector like this: v' = Mv, not v' = vM.
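A quick shape check for the 3D case makes the difference concrete. With v a 3 × 1 column, Mv is defined and is again 3 × 1. Transposing both sides, using $(M\mathbf{v})^T = \mathbf{v}^T M^T$, shows that a library using the row-vector form has to multiply by the transpose of the matrix a column-vector library uses for the same transform:

$$
\underbrace{\mathbf{v}'}_{3\times 1} = \underbrace{M}_{3\times 3}\,\underbrace{\mathbf{v}}_{3\times 1}
\quad\Longleftrightarrow\quad
\underbrace{\mathbf{v}'^T}_{1\times 3} = \underbrace{\mathbf{v}^T}_{1\times 3}\,\underbrace{M^T}_{3\times 3}
$$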

Math

Getting your matrix and vector shapes correct is vital to doing more advanced mathematics, especially if you're referring to published mathematical materials, all of which will use columns for vectors, and reserve rows for gradients, differential forms, covariant tensors, and the like. My lecture on vector calculus gives a ton of examples of why it's important to get your matrix shapes correct, and why a vector must be a column, not a row. Here is a single simple example...

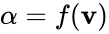

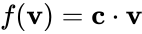

Say you have a scalar function of a vector:

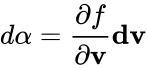

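This might be something as simple as a dot product with a constant vector, $f(\mathbf{v}) = \mathbf{a}\cdot\mathbf{v}$, or something really complicated. If you differentiate this symbolically, you get:

$$df = \frac{\partial f}{\partial \mathbf{v}}\,d\mathbf{v}$$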

The shape of $d\mathbf{v}$ is the same as the shape of the vector $\mathbf{v}$. But what is the shape of $\partial f/\partial\mathbf{v}$? It sits to the left of the vector, and their adjacency (multiplication) produces a scalar. Note that this is simple adjacency: there is no implicit dot product or wacky operator between the partial and the vector; they are just multiplied together, left to right, using normal linear algebra rules. So what does this say about the shapes?

Either the vector is a column and the partial must then be a row, so the adjacency is the simple matrix product of a 1 × n matrix (row vector) times an n × 1 matrix (column vector), which produces a 1 × 1 matrix (a scalar). Or, if you insist that $d\mathbf{v}$ is a row vector, then tensor insanity ensues, and you are forced to come up with some object that, when placed to the left of a row vector, multiplies it to produce a scalar. That way lies madness. If you just make sure you are using vectors as columns and forms as rows, it all works trivially (until you get to differentiating vector functions of vectors, where you sometimes have to involve 3-tensors or finesse the notation no matter what you do, but that's a separate topic).
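As a worked instance of this argument, take the dot product with a constant vector $\mathbf{a}$, written with an explicit transpose as $\mathbf{a}^T\mathbf{v}$, plus a quadratic form $\mathbf{v}^T A\mathbf{v}$ (an added illustration, with $A$ an arbitrary constant n × n matrix). In both cases the partial comes out as a 1 × n row that multiplies the n × 1 column $d\mathbf{v}$ to give a scalar:

$$
f(\mathbf{v}) = \mathbf{a}^T\mathbf{v}
\;\Rightarrow\;
df = \underbrace{\mathbf{a}^T}_{1\times n}\,\underbrace{d\mathbf{v}}_{n\times 1},
\qquad
\frac{\partial f}{\partial \mathbf{v}} = \mathbf{a}^T
$$

$$
g(\mathbf{v}) = \mathbf{v}^T A\,\mathbf{v}
\;\Rightarrow\;
dg = d\mathbf{v}^T A\,\mathbf{v} + \mathbf{v}^T A\,d\mathbf{v}
= \mathbf{v}^T \left(A + A^T\right) d\mathbf{v},
\qquad
\frac{\partial g}{\partial \mathbf{v}} = \mathbf{v}^T \left(A + A^T\right)
$$

The middle step uses the fact that the scalar $d\mathbf{v}^T A\mathbf{v}$ equals its own transpose, $\mathbf{v}^T A^T d\mathbf{v}$.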

Using column vectors is not at all controversial in mathematics and physics, but in graphics, for historical reasons[1], it's still an issue. In fact, it's probably true that most of the game math libraries out there do v' = vM, which saddens me greatly, but hopefully this is changing.

Code

Be careful when coding up vector libraries, however. It's often tempting to use the type system of your language to distinguish between column and row vectors, but I think this is rarely worthwhile. You can do it fairly easily, but it's usually more trouble than it's worth. I've found the knee of the complexity/utility curve is to just have a single vector type and do matrix-times-vector as v' = Mv. If you want to do a vector-times-matrix multiply with a row vector, use a named function call instead of an operator, because it's a much less common situation. Often 90% of those row-vector multiplies come from $\mathbf{v}^T M\mathbf{v}$ operations, in which case you can easily express them as DotProduct(v, M*v).
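Here is a minimal sketch of that design in Python, assuming plain 3 × 3 matrices stored as tuples of rows. The names `Vec3`, `Mat3`, `dot`, `row_times_matrix`, and `quadratic_form` are hypothetical illustrations, not from any particular library. There is one vector type, `M @ v` (Python's matrix-multiplication operator, playing the role of `M*v`) is the only multiplication operator, the rare row-vector product gets a named function, and vᵀMv is written as a dot product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """One vector type for everything; it acts as a column in M @ v."""
    x: float
    y: float
    z: float

    def __iter__(self):
        return iter((self.x, self.y, self.z))


def dot(a: Vec3, b: Vec3) -> float:
    return a.x * b.x + a.y * b.y + a.z * b.z


@dataclass(frozen=True)
class Mat3:
    """3x3 matrix held as a tuple of three rows (storage order is a separate choice)."""
    rows: tuple

    def __matmul__(self, v: Vec3) -> Vec3:
        # v' = M v: each output component is one row of M dotted with the column v.
        return Vec3(*(sum(mij * vj for mij, vj in zip(row, v)) for row in self.rows))


def row_times_matrix(v: Vec3, m: Mat3) -> Vec3:
    """The less common v^T M, deliberately a named function rather than an operator.

    Component j is sum_i v_i * M[i][j], i.e. v dotted with column j of M.
    """
    return Vec3(*(sum(vi * m.rows[i][j] for i, vi in enumerate(v)) for j in range(3)))


def quadratic_form(v: Vec3, m: Mat3) -> float:
    # v^T M v expressed without any row-vector type: dot(v, M v).
    return dot(v, m @ v)
```

For example, with `M = Mat3(((1, 2, 0), (0, 1, 0), (0, 0, 3)))` and `v = Vec3(1, 1, 1)`, `M @ v` equals `Vec3(3, 1, 3)`, `row_times_matrix(v, M)` equals `Vec3(1, 3, 3)` (the columns summed), and `quadratic_form(v, M)` is 7.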

The same advice goes for keeping vectors and points as separate types. There are just too many circumstances where you want to convert between points and vectors, or take transposes to convert between rows and columns. You still want to keep rows and columns separate in your notation and your variable naming; I've just never seen it pay off to enforce the distinction with the type system of your main game programming language, like C++. This is definitely a coding style issue, so it's subjective, of course, and I'm sure some people have found it useful. For mathematical programming languages and systems, like Mathematica, I definitely do think it's a good idea.

Some people argue that SSE and other SIMD floating-point units make a column-major layout more efficient, but that has nothing to do with your notational conventions, on paper or in code. Yes, you might end up storing matrices in column-major (Fortran) order if you're really concerned about this, but you should still write v' = Mv in code, or else risk going insane as you use more advanced math.
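To illustrate that storage order and notation are independent, here is a sketch that computes the same v' = Mv from a 4 × 4 matrix stored as a flat 16-element list in either row-major or column-major order. All function names are hypothetical. Only the index arithmetic changes; the math on paper does not. The last function shows the flip side: reading a column-major array as if it were row-major yields Mᵀ, which is exactly what a row-vector vM convention expects, so both conventions produce the same result from the same bytes.

```python
def mat_vec_row_major(m, v):
    """v' = M v with M stored row-major: element (r, c) lives at m[r * 4 + c]."""
    return [sum(m[r * 4 + c] * v[c] for c in range(4)) for r in range(4)]


def mat_vec_col_major(m, v):
    """v' = M v with M stored column-major (Fortran order): element (r, c) lives at m[c * 4 + r]."""
    return [sum(m[c * 4 + r] * v[c] for c in range(4)) for r in range(4)]


def vec_mat_row_major(v, m):
    """Row-vector convention v' = v M with M read row-major: (v M)_c = sum_r v_r * M[r][c]."""
    return [sum(v[r] * m[r * 4 + c] for r in range(4)) for c in range(4)]


def to_row_major(rows):
    # Flatten a list of 4 rows, row after row.
    return [x for row in rows for x in row]


def to_col_major(rows):
    # Flatten a list of 4 rows, column after column.
    return [rows[r][c] for c in range(4) for r in range(4)]
```

For instance, with the translation matrix `rows = [[1, 0, 0, 5], [0, 1, 0, 6], [0, 0, 1, 7], [0, 0, 0, 1]]` (column-vector convention, translation in the last column) and the point `v = [1, 2, 3, 1]`, all three multiply functions return `[6, 8, 10, 1]`, given the matching storage (`to_col_major(rows)` for both `mat_vec_col_major` and `vec_mat_row_major`).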

Links

Some other pages on the topic:

[1] My understanding of the history: The 1st edition of the classic graphics textbook by Foley and van Dam used row vectors and vector-times-matrix. Iris GL followed suit. They both later realized their mistake relative to all of "real" mathematics and physics, and switched to column vectors and matrix-times-vector, as God intended. F&vD switched in their 2nd edition, and when OpenGL was created from Iris GL, it was switched notationally as well. However, since SGI wanted to keep binary matrix layout compatibility between OpenGL and Iris GL, they decided that OpenGL matrices would be specified in Fortran column-major order instead of C row-major order, prolonging the pain and confusion.
