In my recent lecture, Achievements Considered Harmful?, I ranted briefly about what I call metrics fetishism: how it's taking over game design, and why we should be careful.

In the old days, people designed games by their instinct and intuition. Notice I didn't say the "good old days"; it was just how things were done. If you wanted feedback, you'd playtest the game and watch the players struggle with the controls, or waltz through some section you thought was hard, or whatever. Then you'd iterate.

More recently, as computers got networked and more powerful, we started recording metrics about how players played our games and feeding that back into the design process. We dump a lot of information into a database, and then we mine that information for clues about how to optimize certain aspects of our games, whether it's "fun" or "ARPU" (average revenue per user) or whatever. This happens during development, and now that a lot of games are online, it happens after we ship, too, with the design being constantly iterated based on metrics gathered live after launch.

Now, metrics and closed feedback loops are great in the abstract, but like any tool, they can be misused. Just as it's usually a bad idea to design a game based totally on your intuition without grounding that intuition in the cold hard reality of another human being touching the controls, it's also bad to blindly gather and follow metrics data.

In the old days, we erred too far on the intuition side of the equation, but now I fear we're erring too far on the metrics side of the equation. This is what I call metrics fetishism.

The problem is not that the data is wrong[1]; the problem is that we tend to gather the data that is convenient to gather, we worship that data because it is at least some concrete port in the storm of game design and player behavior, and then we assume we can take a reasonable derivative from that data and hill-climb to a better place in design-space.

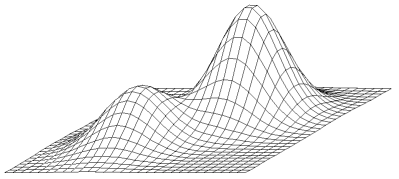

But, as anybody who knows any math can tell you, hill-climbing is not a very good algorithm for optimizing complex functions, and game design is a very complex function indeed. The problem with hill-climbing is that it can only find local maxima; it has no visibility into where the global maximum lies in the space. If you blindly hill-climb, you will end up at the top of the nearest hill, terrified to move because every derivative points down, while the giant mountain of game design awesomeness sits right over there.
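To make the local-maximum trap concrete, here is a minimal Python sketch of greedy hill-climbing on a toy one-dimensional "design space". The function `fun` and its shape (a small hill peaking at x=20 with height 10, a taller mountain peaking at x=80 with height 50, and a flat valley of zeros in between) are hypothetical, chosen only to illustrate the point; they are not real game metrics.

```python
def fun(x: int) -> int:
    # Hypothetical toy "design space" over the integers 0..100:
    # a small hill peaking at x=20 (height 10), a mountain peaking
    # at x=80 (height 50), and a flat valley of zeros between them.
    return max(10 - abs(x - 20), 50 - 2 * abs(x - 80), 0)


def hill_climb(f, x: int, lo: int = 0, hi: int = 100) -> int:
    """Greedy hill-climbing: step to the better neighbor until none improves."""
    while True:
        # Only look one step left and one step right (the local "derivative").
        neighbors = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbors, key=f)
        if f(best) <= f(x):
            return x  # every step points down (or is flat): we're stuck here
        x = best


print(hill_climb(fun, 15), hill_climb(fun, 70))  # → 20 80
```

Started on the slope of the small hill, the climber stops at x=20 because both neighbors score lower, even though the mountain at x=80 is five times as high; only a start that is already on the mountain's slope finds it.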

We really want a mix of intuition and metrics. The intuition gets you out of local maxima, and yes, it sometimes makes things worse for a bit, but it's our only hope for finding higher hills. In a global optimization algorithm like simulated annealing, the intuition is the equivalent of turning up the temperature so you jump around in state space. The metrics let you polish those new points to perfection, but metrics alone won't find points worth polishing; you need to trust your intuition for that.
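The annealing analogy can be sketched the same way. This is a minimal, textbook-style simulated annealing loop with Metropolis acceptance (always take an improvement, take a worse move with probability exp(delta / T)) and a geometric cooling schedule, run on a hypothetical toy landscape assumed purely for illustration. The parameter values (`t_start`, `t_end`, `steps`, the ±10 jump size) are arbitrary choices, and returning the best point ever visited is one common variant, not the only one.

```python
import math
import random


def fun(x: int) -> int:
    # Hypothetical toy "design space" over the integers 0..100:
    # a small hill peaking at x=20 (height 10), a mountain peaking
    # at x=80 (height 50), and a flat valley of zeros between them.
    return max(10 - abs(x - 20), 50 - 2 * abs(x - 80), 0)


def anneal(f, x: int, lo: int = 0, hi: int = 100, t_start: float = 50.0,
           t_end: float = 0.05, steps: int = 5000, seed: int = 0) -> int:
    """Simulated annealing that maximizes f over the integers lo..hi.

    Hot phase: big random jumps, often accepted even when they make things
    worse (the "intuition"). Cold phase: almost only improvements are
    accepted, so it behaves like hill-climbing (the "metrics").
    """
    rng = random.Random(seed)
    best = x
    for i in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (i / max(1, steps - 1))
        # Propose a random nearby point, clamped to the domain.
        candidate = min(hi, max(lo, x + rng.randint(-10, 10)))
        delta = f(candidate) - f(x)
        # Metropolis rule: accept improvements, sometimes accept worse moves.
        if delta >= 0 or rng.random() < math.exp(delta / t):
            x = candidate
        # Remember the best point seen so far.
        if f(x) > f(best):
            best = x
    return best


print(anneal(fun, 15))
```

Starting from x=15, where greedy climbing gets stuck on the small hill, the early high-temperature phase accepts downhill jumps often enough to cross the valley, and with these settings the search should reliably report the mountain top at x=80. As the temperature falls, the loop becomes close to greedy hill-climbing, which just polishes the point it has already found.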

The metrics are the craft, and the intuition is the art.

[1] Assuming there aren't bugs in the metrics code instrumenting the game code, of course, which is probably not a great assumption, but anyway...